selenium爬取微博评论,点赞数,点赞时间

程序员文章站

2022-05-02 20:42:18

...

import time,re

from selenium import webdriver

from selenium.webdriver.support.ui import WebDriverWait

import time

import pandas as pd

from selenium.webdriver.support import expected_conditions as EC

from selenium.webdriver.common.by import By

driver = webdriver.Chrome()

driver.get("https://login.sina.com.cn/signup/signin.php?")

# 60秒内输入完用户名和密码

time.sleep(50)

content_list = []

url_list = []

# 获取所有的url地址

for page in range(1,50):

driver.get("https://s.weibo.com/weibo/%25E6%2596%25B9%25E6%25B4%258B%25E6%25B4%258B%25E5%25AE%25B6%25E6%259A%25B4?q=%E6%96%B9%E6%B4%8B%E6%B4%8B%E5%AE%B6%E6%9A%B4&typeall=1&suball=1×cope=custom:2020-11-18-1:2020-12-18-23&Refer=g&page={}".format(page))

time.sleep(6)

for i3 in range(20):

i3 += 1

# 这个是点击单个评论

try:

ret2 = driver.find_element_by_xpath("//*[@id='pl_feedlist_index']/div[2]/div[{}]/div/div[1]/div[2]/div[2]/div[2]/div/div/ul/li[2]/a".format(i3))

if len(ret2.text) > 2:

print(ret2.text)

url1 = driver.find_element_by_xpath("//*[@id='pl_feedlist_index']/div[2]/div[{}]/div/div[1]/div[2]/div[2]/div[2]/div/div/ul/li[2]/a".format(i3)).get_attribute("href")

url2 = url1 + "&type=comment"

print(url2)

url_list.append(url2)

except Exception as e:

pass

'''循环操作,获取剩余页数的数据'''

for i2 in url_list:

driver.get(i2)

time.sleep(5)

i = 1

while i:

try:

element = WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.CLASS_NAME, 'more_txt')))

time.sleep(3)

driver.find_element_by_xpath("//*[@class='list_ul']//a/span[@class='more_txt']").click()

time.sleep(3)

except Exception as e:

break

# 开始解析数据

print("开始解析数据")

time.sleep(4)

li_list = driver.find_elements_by_xpath("//*[@class='list_ul']/div/div[2]")

for li in li_list:

print(li.text)

try:

item = {}

anchor = re.split(r"\n", li.text)[0]

item["anchor"] = re.split(r":", anchor)[1]

time.sleep(1)

watch_num = re.split(r"\n", li.text)[2]

item["watch_num"] = (re.match("ñ\d*", watch_num).group())[1:]

time.sleep(1)

item["time"] = re.split(r"\n", li.text)[3]

print(item)

content_list.append(item)

except Exception as e:

print(e)

finally:

pass

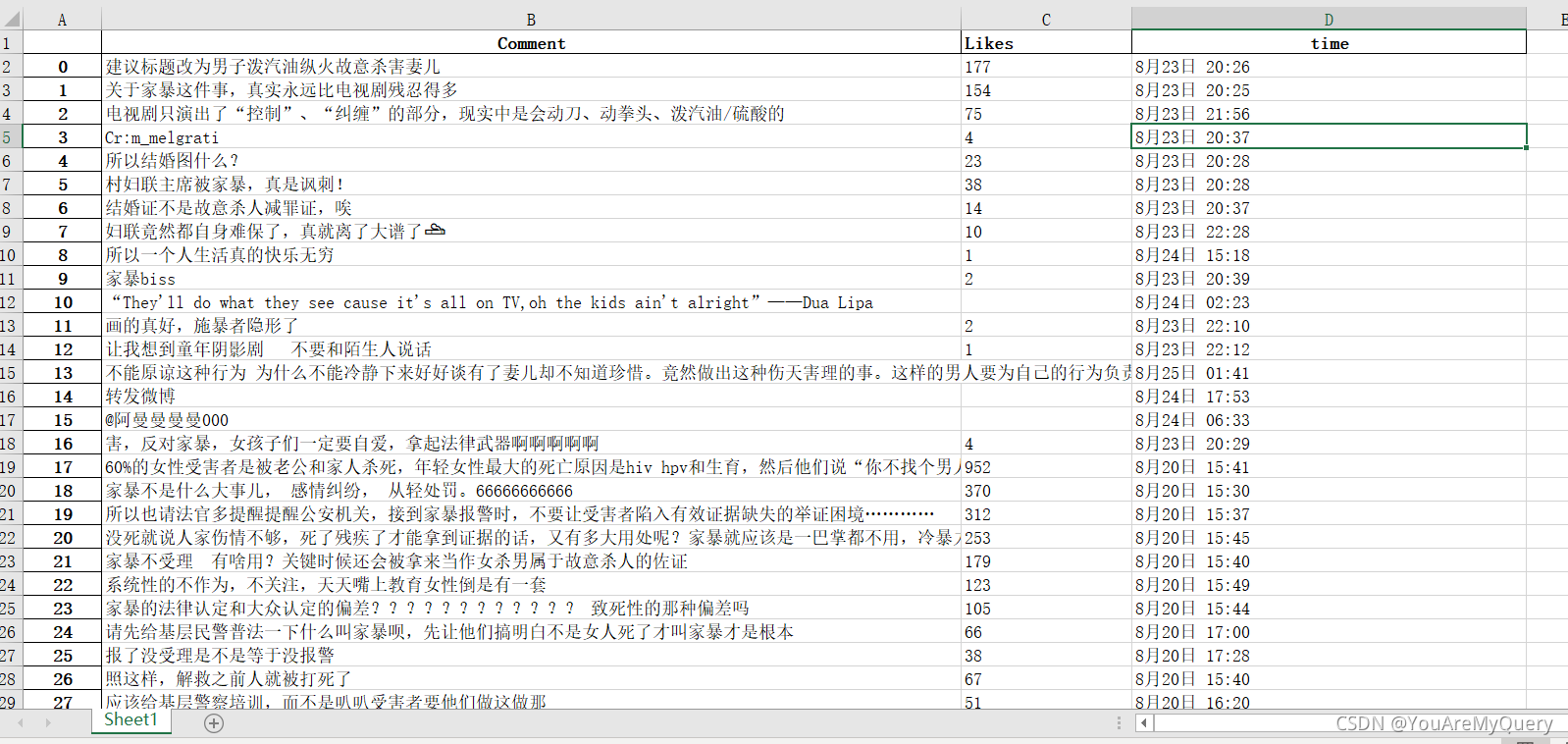

Comment = [z["anchor"] for z in content_list]

Likes = [zz["watch_num"] for zz in content_list]

time = [zzz["time"] for zzz in content_list]

data = pd.DataFrame({'Comment': Comment, 'Likes': Likes,'time':time})

data.to_excel("方洋洋家暴.xlsx")

driver.quit()

上一篇: python爬取微博转发以及转发后的点赞数、转发人信息

下一篇: 显示1s自动隐藏的动画效果