How Spark Runs on MaxCompute

程序员文章站

2022-06-30 10:13:08


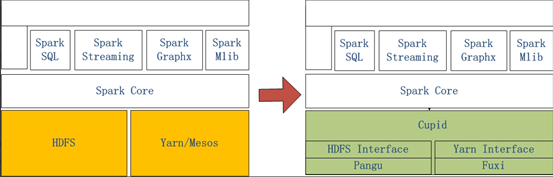

I. Spark System Overview
===========

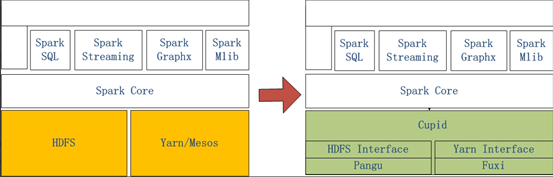

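The left side shows the architecture of native Spark. On the right, Spark on MaxCompute runs on Cupid, a platform developed in-house by Alibaba Cloud. Cupid natively supports the computing frameworks that the open-source YARN supports, such as Spark.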

II. Configuring and Using Spark from the Client
===================

**2.1 Open the link below and download the client to your local machine**

[http://odps-repo.oss-cn-hangzhou.aliyuncs.com/spark/2.3.0-odps0.30.0/spark-2.3.0-odps0.30.0.tar.gz?spm=a2c4g.11186623.2.12.666a4b69yO8Qur&file=spark-2.3.0-odps0.30.0.tar.gz](https://yq.aliyun.com/go/articleRenderRedirect?url=http%3A%2F%2Fodps-repo.oss-cn-hangzhou.aliyuncs.com%2Fspark%2F2.3.0-odps0.30.0%2Fspark-2.3.0-odps0.30.0.tar.gz%3Fspm%3Da2c4g.11186623.2.12.666a4b69yO8Qur%26amp%3Bfile%3Dspark-2.3.0-odps0.30.0.tar.gz)

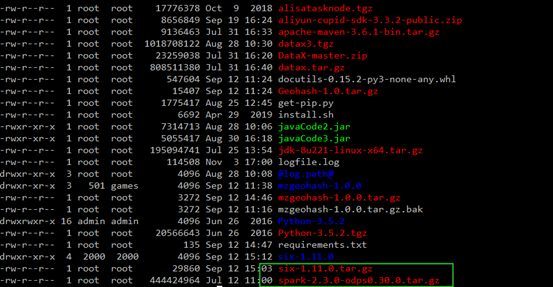

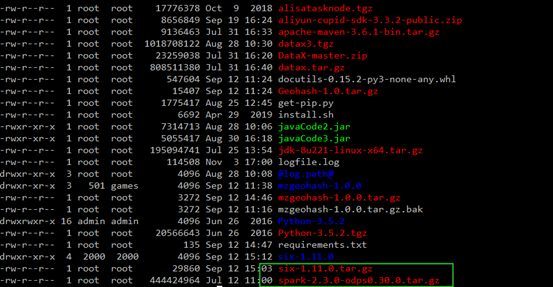

**2.2 Upload the file to the ECS instance**

**2.3 Extract the archive**

```

tar -zxvf spark-2.3.0-odps0.30.0.tar.gz

```

**2.4 Configure spark-defaults.conf**

```

# spark-defaults.conf

# In most cases the default template only needs your MaxCompute account information filled in before Spark can be used

spark.hadoop.odps.project.name =

spark.hadoop.odps.access.id =

spark.hadoop.odps.access.key =

# The remaining settings can usually keep their default values

spark.hadoop.odps.end.point = http://service.cn.maxcompute.aliyun.com/api

spark.hadoop.odps.runtime.end.point = http://service.cn.maxcompute.aliyun-inc.com/api

spark.sql.catalogImplementation=odps

spark.hadoop.odps.task.major.version = cupid_v2

spark.hadoop.odps.cupid.container.image.enable = true

spark.hadoop.odps.cupid.container.vm.engine.type = hyper

```
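Before submitting a job, it can help to confirm that the three account settings are actually filled in. The following is a minimal sketch and not part of the MaxCompute client: the function name `check_odps_conf` is hypothetical, and it assumes the `key = value` layout shown in the template above.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not shipped with the client): list the required
# MaxCompute account settings that are still empty in spark-defaults.conf.
check_odps_conf() {
  local conf_file="$1"
  local missing=0
  local key value
  for key in spark.hadoop.odps.project.name \
             spark.hadoop.odps.access.id \
             spark.hadoop.odps.access.key; do
    # Find lines whose key (text before the first "=") matches exactly,
    # then keep the value of the last such line with whitespace removed.
    value="$(awk -F= -v k="$key" '
      { name = $1; gsub(/[ \t]/, "", name) }
      name == k { v = $0; sub(/^[^=]*=/, "", v); gsub(/[ \t\r]/, "", v); val = v }
      END { print val }' "$conf_file")"
    if [ -z "$value" ]; then
      echo "missing: $key"
      missing=1
    fi
  done
  return "$missing"
}
```

Calling `check_odps_conf conf/spark-defaults.conf` prints one `missing: ...` line per empty setting and returns a non-zero status if any are empty. The `conf/` location is an assumption; point the function at wherever your copy of the file lives.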

**2.5 Download the corresponding code from GitHub, upload it to the ECS instance, and unzip it**

[https://github.com/aliyun/MaxCompute-Spark](https://yq.aliyun.com/go/articleRenderRedirect?url=https%3A%2F%2Fgithub.com%2Faliyun%2FMaxCompute-Spark)

```

unzip MaxCompute-Spark-master.zip

```

**2.6 Package the code into a JAR (make sure Maven is installed)**

```

cd MaxCompute-Spark-master/spark-2.x

mvn clean package

```
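The build step can be wrapped in two small checks: one that fails early when Maven is missing, and one that locates the resulting JAR. This is only a sketch. The function names `require_maven` and `find_shaded_jar` are hypothetical, and the `target/*-shaded.jar` location is an assumption based on the JAR path used elsewhere in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: stop with a clear message if Maven is not on the PATH.
require_maven() {
  if ! command -v mvn >/dev/null 2>&1; then
    echo "Maven (mvn) not found on PATH; install it before packaging" >&2
    return 1
  fi
}

# Hypothetical helper: print the path of the first shaded JAR found directly
# under <module>/target (assumed output location of `mvn clean package`).
find_shaded_jar() {
  local module_dir="$1"
  local jar
  jar="$(find "$module_dir/target" -maxdepth 1 -name '*-shaded.jar' 2>/dev/null | head -n 1)"
  if [ -z "$jar" ]; then
    echo "no shaded JAR under $module_dir/target; run 'mvn clean package' first" >&2
    return 1
  fi
  echo "$jar"
}
```

For example, `require_maven && find_shaded_jar MaxCompute-Spark-master/spark-2.x` prints the JAR path once the build has finished.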

**2.7 Locate the JAR and run it**

```

bin/spark-submit --master yarn-cluster --class com.aliyun.odps.spark.examples.SparkPi \
MaxCompute-Spark-master/spark-2.x/target/spark-examples_2.11-1.0.0-SNAPSHOT-shaded.jar

```
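The command above uses relative paths: `bin/spark-submit` is relative to the extracted client directory, while the JAR path is relative to wherever the source was unzipped. A small wrapper that takes both locations explicitly avoids confusion about the working directory. This is a sketch under assumptions: `submit_spark_pi` and the `DRY_RUN` switch are hypothetical names, and only the flags already shown in this article are used.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the SparkPi submission shown above.
#   $1: directory of the extracted Spark client (contains bin/spark-submit)
#   $2: path to the shaded example JAR
# With DRY_RUN=1 the command is printed instead of executed.
submit_spark_pi() {
  local spark_home="$1" jar_path="$2"
  # Rebuild the positional parameters as the full command line.
  set -- "$spark_home/bin/spark-submit" --master yarn-cluster \
         --class com.aliyun.odps.spark.examples.SparkPi "$jar_path"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}
```

Running `DRY_RUN=1 submit_spark_pi "$PWD/spark-2.3.0-odps0.30.0" "$PWD/MaxCompute-Spark-master/spark-2.x/target/spark-examples_2.11-1.0.0-SNAPSHOT-shaded.jar"` first lets you inspect the command before it is actually submitted. The client directory name here is assumed from the archive name.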

III. Configuring and Using Spark in DataWorks
=========================

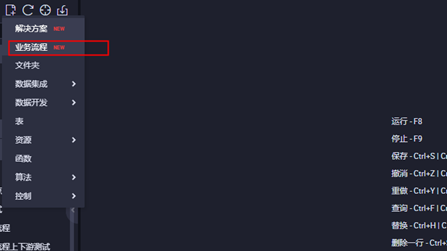

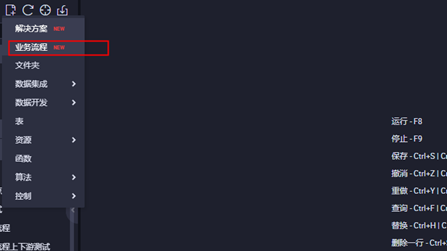

**3.1 Open the DataWorks console and click Business Flow**

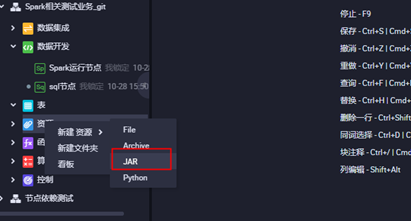

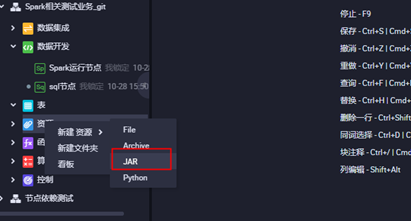

**3.2 Open the business flow and create an ODPS Spark node**

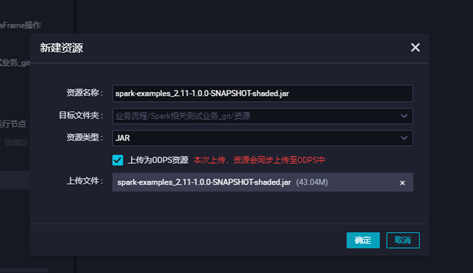

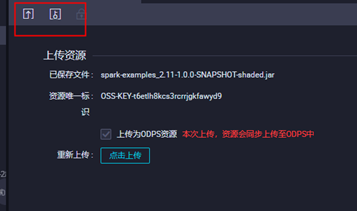

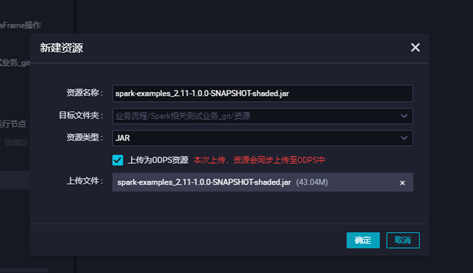

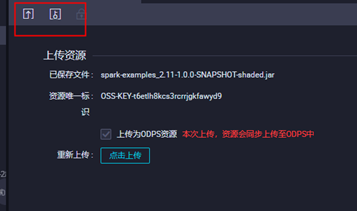

**3.3 Upload the JAR resource: select the JAR file to upload, then commit it**

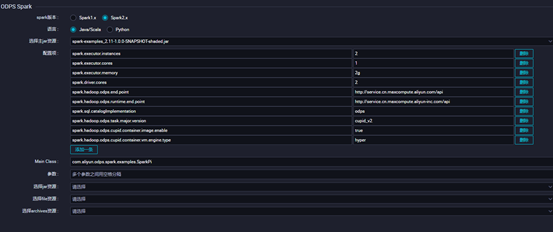

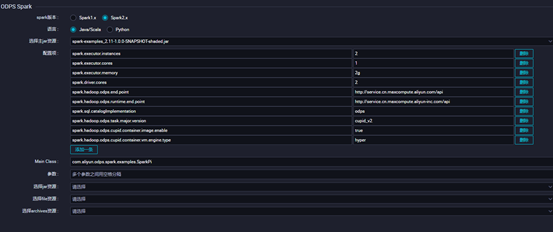

**3.4 Configure the ODPS Spark node, click Save and then Commit, and click Run to check the run status**

IV. Using Spark in a Local IDEA Test Environment
=====================

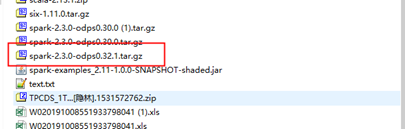

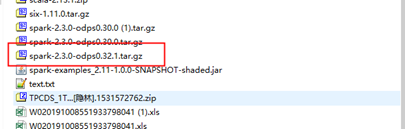

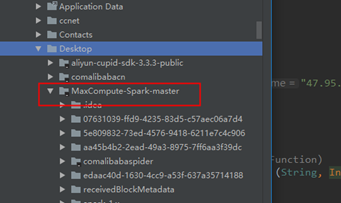

**4.1 Download and extract the client and the template code**

Client:

[http://odps-repo.oss-cn-hangzhou.aliyuncs.com/spark/2.3.0-odps0.30.0/spark-2.3.0-odps0.30.0.tar.gz?spm=a2c4g.11186623.2.12.666a4b69yO8Qur&file=spark-2.3.0-odps0.30.0.tar.gz](https://yq.aliyun.com/go/articleRenderRedirect?url=http%3A%2F%2Fodps-repo.oss-cn-hangzhou.aliyuncs.com%2Fspark%2F2.3.0-odps0.30.0%2Fspark-2.3.0-odps0.30.0.tar.gz%3Fspm%3Da2c4g.11186623.2.12.666a4b69yO8Qur%26amp%3Bfile%3Dspark-2.3.0-odps0.30.0.tar.gz)

Template code:

[https://github.com/aliyun/MaxCompute-Spark](https://yq.aliyun.com/go/articleRenderRedirect?url=https%3A%2F%2Fgithub.com%2Faliyun%2FMaxCompute-Spark)

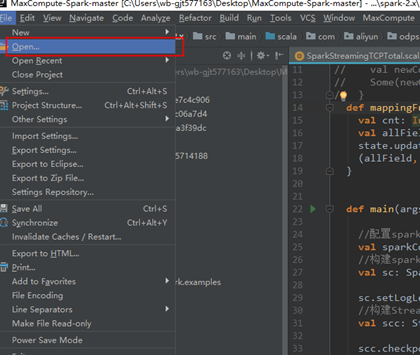

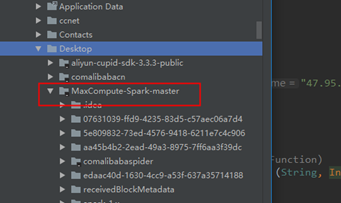

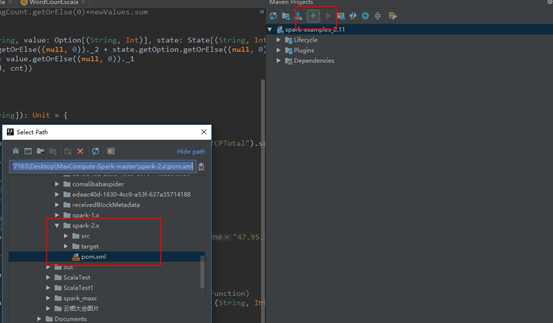

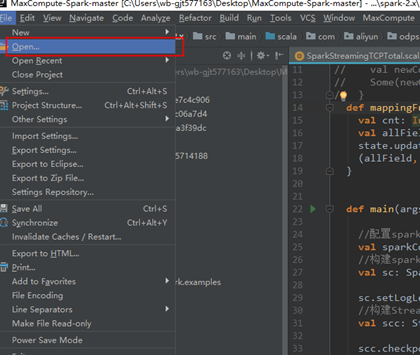

**4.2打开idea,点击Open选择模板代码**

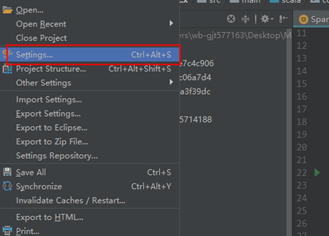

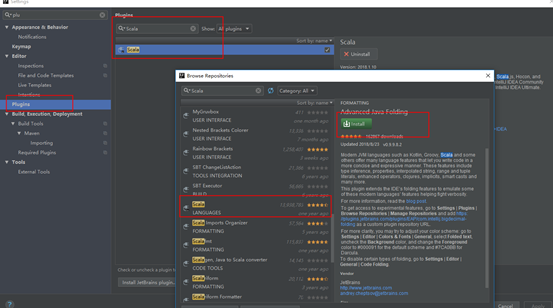

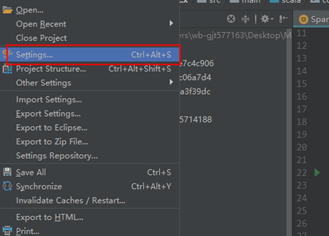

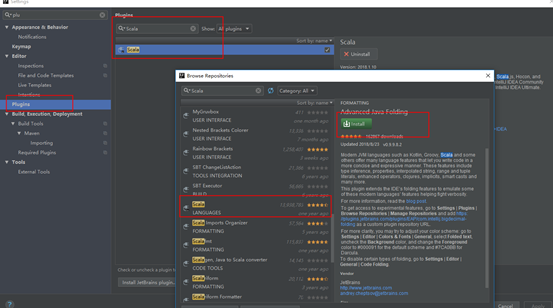

**4.2安装Scala插件**

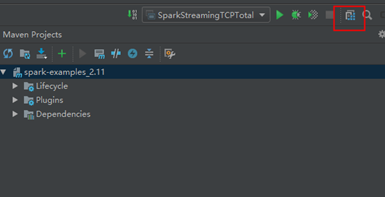

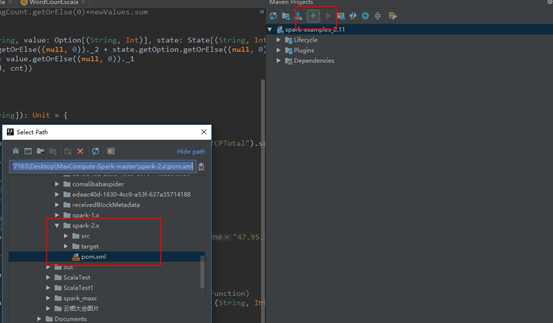

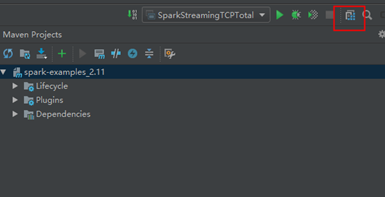

**4.3配置maven**

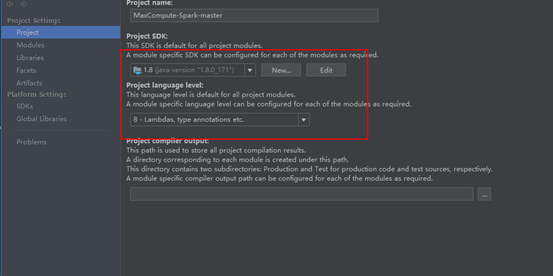

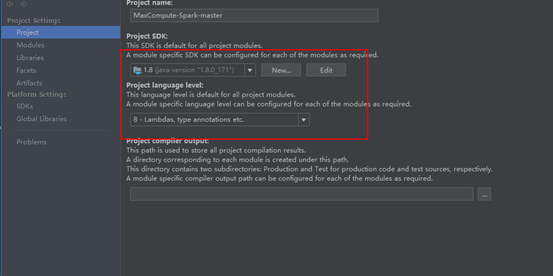

**4.4配置JDK和相关依赖**

